Apache Spark is an open-source distributed computing system that is widely used for big data processing and analytics. This guide walks you through running Apache Spark on Ubuntu in a UTM virtual machine on a Mac, step by step.

Prerequisites

- A Mac with UTM installed.

- An Ubuntu virtual machine running in UTM.

- Internet access within the Ubuntu VM.

Step 1: Update and Upgrade Ubuntu

Start by updating the package index and upgrading existing packages:

sudo apt update && sudo apt upgrade

Fig. Updating and upgrading Ubuntu packages.

Step 2: Install Java

Apache Spark requires Java to run. Install OpenJDK:

sudo apt install default-jdk -y

Verify the installation:

java -version

Fig. Checking the Java version after installation.

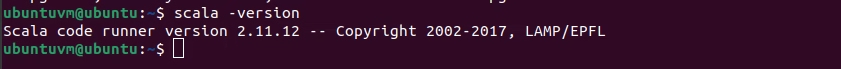
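If you would rather check the Java version from a script than read it by eye, the sketch below may help. It assumes that `java -version` prints a line containing `version "..."` on stderr, which is what OpenJDK builds normally do. The helper names `parse_java_major` and `installed_java_major` are made up for this example.

```python
import re
import subprocess


def parse_java_major(version_output: str) -> int:
    """Return the major Java version found in `java -version` output.

    Hypothetical helper for this guide, not part of Spark or the JDK.
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        raise ValueError("no Java version string found")
    major = int(match.group(1))
    # Legacy scheme: Java 8 reports itself as "1.8.0_xxx".
    if major == 1 and match.group(2) is not None:
        return int(match.group(2))
    return major


def installed_java_major() -> int:
    """Run `java -version` and parse its output, which is printed on stderr."""
    result = subprocess.run(
        ["java", "-version"], capture_output=True, text=True, check=True
    )
    return parse_java_major(result.stderr)
```

Calling `installed_java_major()` inside the VM should return the major version as an integer, which you can compare against the Java versions supported by your Spark release.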

Step 3: Install Scala (Optional)

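Spark runs on the Java Virtual Machine (JVM) and is often used with Scala. The Spark binary distribution bundles the Scala libraries it needs, so install Scala separately only if you want a standalone Scala toolchain: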

sudo apt install scala -y

Fig. Installing Scala.

Verify the Scala installation:

scala -version

Fig. Checking the Scala version.

Step 4: Download Apache Spark

Visit the official Apache Spark download page to find the latest release. Alternatively, download a release from the command line, replacing <version> in the URL with the release you want:

wget https://downloads.apache.org/spark/spark-<version>/spark-<version>-bin-hadoop3.tgz

or, to fetch a specific archived release (Spark 3.2.0 in this example):

curl -O https://archive.apache.org/dist/spark/spark-3.2.0/spark-3.2.0-bin-hadoop3.2.tgz
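To make the `<version>` placeholder concrete, here is a small sketch of how the archive URL is put together. It assumes the `archive.apache.org` layout used in the curl command above. The function names are invented for this example, and the Hadoop suffix (`hadoop3.2` for Spark 3.2.0, `hadoop3` for some other releases) has to be checked against the download page for the release you pick.

```python
# Base URL taken from the curl example in this guide.
ARCHIVE_BASE = "https://archive.apache.org/dist/spark"


def spark_archive_name(version: str, hadoop_profile: str) -> str:
    """File name of the pre-built archive (hypothetical helper)."""
    return f"spark-{version}-bin-{hadoop_profile}.tgz"


def spark_archive_url(version: str, hadoop_profile: str,
                      base: str = ARCHIVE_BASE) -> str:
    """Full download URL for a given Spark version and Hadoop suffix."""
    return f"{base}/spark-{version}/{spark_archive_name(version, hadoop_profile)}"
```

For example, `spark_archive_url("3.2.0", "hadoop3.2")` reproduces the URL used with curl above.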

Extract the downloaded file:

tar -xvzf spark-<version>-bin-hadoop3.tgz

or, for the 3.2.0 archive (sudo is not needed when extracting into your own directory):

tar -xvf spark-3.2.0-bin-hadoop3.2.tgz

Move the extracted folder to /opt:

sudo mv spark-<version>-bin-hadoop3 /opt/spark

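or, for the 3.2.0 archive, run the following commands in order:

sudo mkdir /opt/spark

sudo mv spark-3.2.0-bin-hadoop3.2/* /opt/spark

sudo chmod -R 777 /opt/spark

Note that mode 777 makes the directory writable by every user. That is acceptable on a single-user test VM but not on a shared machine.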
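After moving the files, it can be worth confirming that the directory looks like a Spark installation before going further. The following is a minimal sketch, assuming the standard layout in which the launcher scripts used later in this guide sit under `bin` and `sbin`. The function name and the list of files to check are choices made for this example.

```python
from pathlib import Path

# Scripts this guide relies on later; the list is an assumption for this sketch.
EXPECTED_FILES = (
    "bin/spark-shell",
    "bin/pyspark",
    "bin/spark-submit",
    "sbin/start-master.sh",
)


def missing_spark_files(spark_home: str) -> list:
    """Return the expected files that are absent under spark_home."""
    root = Path(spark_home)
    return [rel for rel in EXPECTED_FILES if not (root / rel).is_file()]
```

An empty list from `missing_spark_files("/opt/spark")` suggests the move worked. Any names in the list usually mean the archive was extracted or moved one directory level off.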

Step 5: Set Environment Variables

Edit the ~/.bashrc file to set environment variables for Spark. The file is in your own home directory, so sudo is not needed:

vim ~/.bashrc

Add the following lines at the end of the file:

# Spark Environment Variables

export SPARK_HOME=/opt/spark

export PATH=$SPARK_HOME/bin:$PATH

export JAVA_HOME=$(readlink -f /usr/bin/java | sed "s:/bin/java::")

or, if you also want the cluster scripts in sbin (such as start-master.sh) on your PATH:

export SPARK_HOME=/opt/spark

export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

Save the file and exit the vim editor with the following command:

:wq

Reload the environment:

source ~/.bashrc
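If `spark-shell` is later reported as "command not found", the cause is usually one of the variables above. The sketch below shows one way to check them from Python. It only covers `SPARK_HOME` and `PATH`, and `spark_env_problems` is a hypothetical helper written for this guide.

```python
import os


def spark_env_problems(env) -> list:
    """Return human-readable problems with the Spark environment variables.

    `env` is any mapping with a .get method, for example os.environ.
    """
    problems = []
    spark_home = env.get("SPARK_HOME")
    if not spark_home:
        problems.append("SPARK_HOME is not set")
        return problems
    # PATH entries are separated by os.pathsep (":" on Linux).
    path_entries = env.get("PATH", "").split(os.pathsep)
    if os.path.join(spark_home, "bin") not in path_entries:
        problems.append("$SPARK_HOME/bin is not on PATH")
    return problems
```

Running `print(spark_env_problems(os.environ))` in a new terminal should print an empty list once ~/.bashrc has been reloaded.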

Follow Step 6 if you want to install Python and run PySpark.

Step 6: Install Python and pip (Optional)

If you plan to use PySpark, ensure Python and pip are installed:

sudo apt install python3 python3-pip -y

Verify the installation:

python3 --version

pip3 --version

Install PySpark:

pip3 install pyspark
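To confirm that pip installed the package for the interpreter you intend to use, you can ask Python for the installed version. This sketch relies only on the standard library's `importlib.metadata` (Python 3.8 or newer); the helper name `installed_version` is an assumption of this example.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a package, or None if it is absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None
```

`installed_version("pyspark")` returns a version string when the installation succeeded and `None` otherwise.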

Step 7: Verify the Installation

Run the Spark shell to confirm the installation:

For Scala:

spark-shell

For PySpark:

pyspark

If the Spark shell or PySpark starts successfully, your installation is complete.

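Start the standalone master server. The cluster scripts live in $SPARK_HOME/sbin, so that directory must be on your PATH:

start-master.sh

Start the Apache Spark worker process, passing the master URL shown in the master's log and web UI (spark://<hostname>:7077 by default):

start-worker.sh spark://<hostname>:7077

On releases older than Spark 3.1 the worker script is named start-slave.sh and takes the same argument.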
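Once the master and a worker are running, a PySpark program can attach to them instead of running in local mode. The sketch below assumes the master listens on the default port 7077 and advertises the VM's hostname; the authoritative URL is the one shown in the master's log and web UI. Both function names are made up for this example, and `connect_to_master` needs PySpark installed.

```python
import socket

# Default port of the standalone master.
DEFAULT_MASTER_PORT = 7077


def standalone_master_url(host=None, port=DEFAULT_MASTER_PORT) -> str:
    """Build a spark:// URL, falling back to this machine's hostname."""
    return f"spark://{host or socket.gethostname()}:{port}"


def connect_to_master(master_url: str, app_name: str = "StandaloneTest"):
    """Create a SparkSession attached to the standalone master."""
    # Imported lazily so the URL helper works even without PySpark.
    from pyspark.sql import SparkSession

    return SparkSession.builder.master(master_url).appName(app_name).getOrCreate()
```

A typical call would be `spark = connect_to_master(standalone_master_url())`, followed by `spark.stop()` when you are done.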

Spark Web UI

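Use the Spark web UI to inspect worker nodes, running applications, and cluster resources. Open the following addresses in a browser inside the VM:

http://localhost:8080 (standalone master UI)

http://localhost:4040 (application UI, available only while an application such as spark-shell is running)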
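If a page does not load, a quick way to tell whether anything is listening on those ports is to try a TCP connection. This is a minimal sketch using only the standard library. The ports 8080 and 4040 are taken from the addresses above, and `port_is_open` and `ui_status` are hypothetical helper names.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def ui_status(host: str = "localhost", ports=(8080, 4040)) -> dict:
    """Map each web UI port to whether something is listening on it."""
    return {port: port_is_open(host, port) for port in ports}
```

`ui_status()` returns a dictionary keyed by port. A `False` for 8080 suggests the master is not running, while a `False` for 4040 simply means no Spark application is active at the moment.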

Step 8: Run a Sample Application

Create a test script (e.g., test.py) to validate the Spark setup:

from pyspark.sql import SparkSession

# Initialize SparkSession

spark = SparkSession.builder.appName("TestSpark").getOrCreate()

# Test DataFrame

data = [("Alice", 34), ("Bob", 45), ("Cathy", 29)]

columns = ["Name", "Age"]

df = spark.createDataFrame(data, columns)

df.show()

# Stop the session to release its resources

spark.stop()

Run the script:

python3 test.py

You should see the test data output displayed in the terminal.

Conclusion

You have successfully installed Apache Spark on Ubuntu in a UTM virtual machine on your Mac. You can now start using Spark for your big data projects. If you encounter any issues, consult the Apache Spark documentation. You can also leave a comment describing the problem, and I will try to help as soon as I can.
